Pick and Place Challenge: MEAM 520 Final Project

The final project for MEAM 520 (Introduction to Robotics) was a “Pick and Place Challenge” in which student teams competed head-to-head. Each team programmed a 7-DOF Franka Emika arm to autonomously grasp, orient, and stack both stationary and moving blocks into towers on a goal platform.

The competition took place in a shared environment between two teams. Each arm could access three platforms: a static platform with four randomly placed blocks, a goal platform on which to stack blocks, and a shared central rotating platform with numerous blocks placed around its edge. Scoring depended on the block type (static or moving), its altitude, and its orientation. Dynamic blocks, higher stacks, and an "upright" block pose all earned higher scores.

My team advanced to the finals of this competition after three successful group-stage rounds. In the finals, we adopted a riskier strategy for grasping moving blocks. Unfortunately, it exceeded some collision force thresholds, so we hit the E-stop and accepted defeat. Still, this class was an incredible experience. I learned the theory and mathematics behind modeling robotic arms, forward kinematics, inverse kinematics, path planning algorithms, collision detection, offline computation, and more. Throughout the semester and ahead of the competition, I translated these lessons into a Python program. It could autonomously plan and execute the grasp, manipulate, and place task in real time.

My responsibilities in this project included:

- deriving the transformations and pipeline that convert raw simulated vision-system data into usable block poses
- developing our static block stacking strategy
- building an offline IK solver as a separate Python class
- optimizing our final script and path planning to run as efficiently as possible
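The vision-to-pose step chains homogeneous transforms, and the sketch below gives a rough illustration of it. It expresses a camera-frame block pose in the robot base frame and then picks a gripper yaw. It assumes the camera sits at a known offset from the end effector and that the blocks are cubes resting flat on a face. The frame names and helpers (`make_transform`, `rot_z`, `block_in_base`, `grasp_yaw`) are hypothetical and are not taken from our course code.

```python
import numpy as np

def make_transform(R, p):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and translation p."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = p
    return T

def rot_z(theta):
    """Rotation matrix for a rotation of theta radians about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def block_in_base(T_base_ee, T_ee_cam, T_cam_block):
    """Chain base <- end effector <- camera <- block (hypothetical frame names).

    T_base_ee would come from forward kinematics; T_ee_cam is an assumed,
    fixed camera mounting offset; T_cam_block is the detected block pose.
    """
    return T_base_ee @ T_ee_cam @ T_cam_block

def grasp_yaw(R_base_block):
    """Yaw (rad) for a top-down grasp of a cube, wrapped to [-pi/4, pi/4).

    The block axis most aligned with base z is treated as "up". The yaw of
    another (horizontal) block axis is then reduced modulo 90 degrees, since a
    cube looks the same after a quarter turn about its vertical axis.
    """
    up = int(np.argmax(np.abs(R_base_block[2, :])))  # row 2 = z-components of block axes
    side = (up + 1) % 3                                # any axis other than "up"
    axis = R_base_block[:, side]
    yaw = np.arctan2(axis[1], axis[0])
    return (yaw + np.pi / 4) % (np.pi / 2) - np.pi / 4
```

Wrapping the yaw into [-45°, 45°) keeps the required wrist rotation small, whichever face of the cube the detector happens to label as the x-axis.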

The following videos show preliminary testing, the first time we ran our code outside of simulation. They were recorded before we optimized our program or implemented offline computation. Our final implementation was more streamlined.

Semi-Autonomous Mobile Robot: MEAM 510 Final Project

This project was the culmination of MEAM 510 (Design of Mechatronic Systems), which I took in my first semester of the Robotics MSE program at Penn.

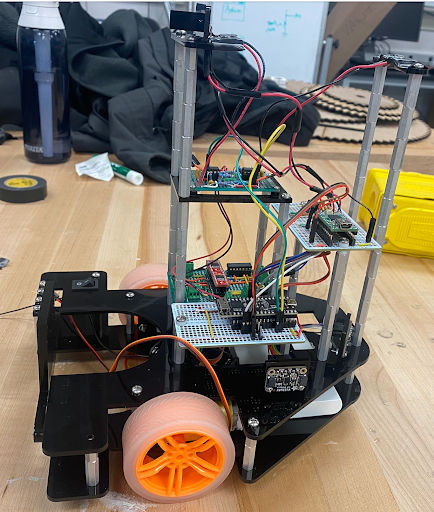

This mobile robot was built from scratch using ESP32 and Teensy 2.0 microcontrollers. It is piloted remotely over Wi-Fi. It also has three autonomous behaviors intended to perform well in the end-of-semester competition: wall-following, continuous localization and tracking in 2D space, and detection and tracking of a source of IR pulses at specific frequencies.

The end-of-semester competition required the semi-autonomous bots to move cans into their respective scoring zones and, if desired, "steal" cans from the opposing side. The pilots were located away from the arena and were given only a video feed of their own side. Most of the cans broadcast their X-Y position in the arena over Wi-Fi via UDP packets. However, one can on each side of the field, the highly valuable “beacon,” emitted only pulses from a ring of IR LEDs. Because the pilots had no view of the opponent's half of the arena, the autonomous behaviors listed above had to be robust.

To track our X-Y position on the field, we built an IR photodiode circuit that could sense, condition, and amplify the signal from an HTC VIVE Base Station installed above the competition arena. We then duplicated this circuit and placed one sensor at the front of the bot and one at the back. Comparing the two positions gave us the robot's orientation. From there, some simple vector math and a proportional steering gain let the bot navigate autonomously to a given position (e.g., a can location).

We also implemented range sensing for wall-following. We mounted two time-of-flight (ToF) sensors on the front and right sides of the bot. With them, the bot could move autonomously counter-clockwise around the perimeter of the field, which was ideal for entering and remaining inside enemy territory.

To track the beacons, we built a second, high-gain IR detection circuit that could detect the beacon pulses at the expected frequencies. It used two IR phototransistors, each with a narrow cone of detection. The robot spun in place until both sensors read the appropriate pulse, then drove forward.
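The sketch below illustrates the front/back-sensor heading estimate and proportional steering behind the VIVE-based navigation described above. It is written in Python for readability and is not the ESP32 code we actually ran. It makes several assumptions:

- Both VIVE readings are already converted to field X-Y coordinates in a right-handed frame (x right, y up).
- The base is differential-drive.
- The gain, speed, and stopping tolerance are made-up values.

`wrap_angle` and `drive_to_target` are hypothetical names.

```python
import math

def wrap_angle(a):
    """Wrap an angle in radians to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi

def drive_to_target(front, back, target, k_p=1.5, base_speed=0.6, tol=50.0):
    """Return (left, right) wheel commands in [-1, 1] for a differential-drive bot.

    front, back: (x, y) readings from the front and back VIVE sensors.
    target: (x, y) goal, e.g. a can position, in the same (assumed) units.
    """
    # Robot position = midpoint of the two sensors; heading = back-to-front vector.
    cx = (front[0] + back[0]) / 2.0
    cy = (front[1] + back[1]) / 2.0
    heading = math.atan2(front[1] - back[1], front[0] - back[0])

    dx, dy = target[0] - cx, target[1] - cy
    if math.hypot(dx, dy) < tol:
        return 0.0, 0.0  # close enough to the target: stop

    # Positive error means the target lies counter-clockwise (to the left).
    error = wrap_angle(math.atan2(dy, dx) - heading)
    turn = k_p * error  # proportional steering term

    # Drive forward only while roughly facing the target; beyond 90 degrees
    # of error, the forward term is zero and the bot turns in place.
    forward = base_speed * max(0.0, math.cos(error))

    # Turning left (CCW) means the right wheel runs faster than the left.
    left = max(-1.0, min(1.0, forward - turn))
    right = max(-1.0, min(1.0, forward + turn))
    return left, right
```

Using two position sensors means a single pair of readings yields a full 2D pose (position plus heading) without relying on wheel odometry.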

The motor circuit drives the two wheels through two H-bridge drivers. An inverter conditions a digital output from the ESP32 to set each motor's direction. Motor speed is set by a PWM signal whose duty cycle the pilot can adjust on the fly. All functionality, including toggling the autonomous behaviors, is controlled over Wi-Fi through an HTML interface.
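The helper below makes the direction/speed split concrete by turning a signed speed command into a direction level and a PWM duty count. It is hypothetical. It assumes a single ESP32 output per motor sets direction, with the inverter supplying the complementary H-bridge input, and it assumes a 10-bit PWM resolution. Neither detail is taken from our schematics, and the function is not an ESP32 API call.

```python
def motor_command(speed, resolution_bits=10):
    """Map a signed speed in [-1, 1] to (direction_level, pwm_duty).

    Hypothetical helper: direction_level is the logic level written to the
    direction pin (assumed: 1 = forward, 0 = reverse), and pwm_duty is the
    duty-cycle count for a PWM channel with the given bit resolution.
    """
    speed = max(-1.0, min(1.0, float(speed)))  # clamp out-of-range commands
    max_duty = (1 << resolution_bits) - 1      # e.g. 1023 for 10-bit PWM
    direction_level = 1 if speed >= 0 else 0   # sign selects direction
    pwm_duty = round(abs(speed) * max_duty)    # magnitude selects duty cycle
    return direction_level, pwm_duty
```

Either the HTML interface or an autonomous controller would produce one signed speed per wheel and call this once per motor.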

My responsibilities in this project included:

- soldering all boards
- designing the motor driver circuit
- designing the beacon detection circuit
- building the VIVE circuit
- creating our autonomous X-Y navigation solution
- pair programming with a teammate to integrate all functionality on the ESP32

I chose to focus on electrical design and programming because I was already familiar with mechanical design.

For more information, including circuit schematics, see our Final Project Report.

The following videos show the bot being piloted over Wi-Fi during the competition, followed by final tests of the three primary autonomous behaviors.

AR Helmet Tracking: CIS 581 Final Project

Because I was interested in pose estimation and facial tracking, I joined a team for the CIS 581 (Computer Vision and Computational Photography) final project. We developed a custom computer vision pipeline that incorporates Google's MediaPipe face-tracking solution. Using the mesh generated by this facial detection, we performed point cloud registration to recover a least-squares estimate of the head's transformation. We then applied that transformation to a 3D helmet model. Next, we defined virtual camera parameters and automatically scaled the helmet mesh to align with the subject's head. Finally, we superimposed the virtual camera's output on the raw footage and rendered the result.
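One standard way to compute a least-squares rigid transformation between corresponding point sets is the Kabsch (SVD) method, sketched below with NumPy. This is a generic illustration, not necessarily the exact registration code from our pipeline. It assumes the face-mesh points and the reference points are already in one-to-one correspondence. It also leaves scaling to a separate step, as in the pipeline described above.

```python
import numpy as np

def kabsch(src, dst):
    """Least-squares rigid transform (R, t) minimizing sum ||R @ src_i + t - dst_i||^2.

    src, dst: (N, 3) arrays of corresponding points (N >= 3, not all collinear).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Center both point sets on their centroids.
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)

    # 3x3 cross-covariance matrix between the centered sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)

    # Flip the last singular direction if needed so R is a proper rotation
    # (det = +1) rather than a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    # Translation that maps the rotated source centroid onto the target centroid.
    t = dst_c - R @ src_c
    return R, t
```

Applying the result to the helmet's vertices as `verts @ R.T + t` moves the helmet by the same rigid motion estimated for the head.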

All Final Project files and a presentation can be found here: